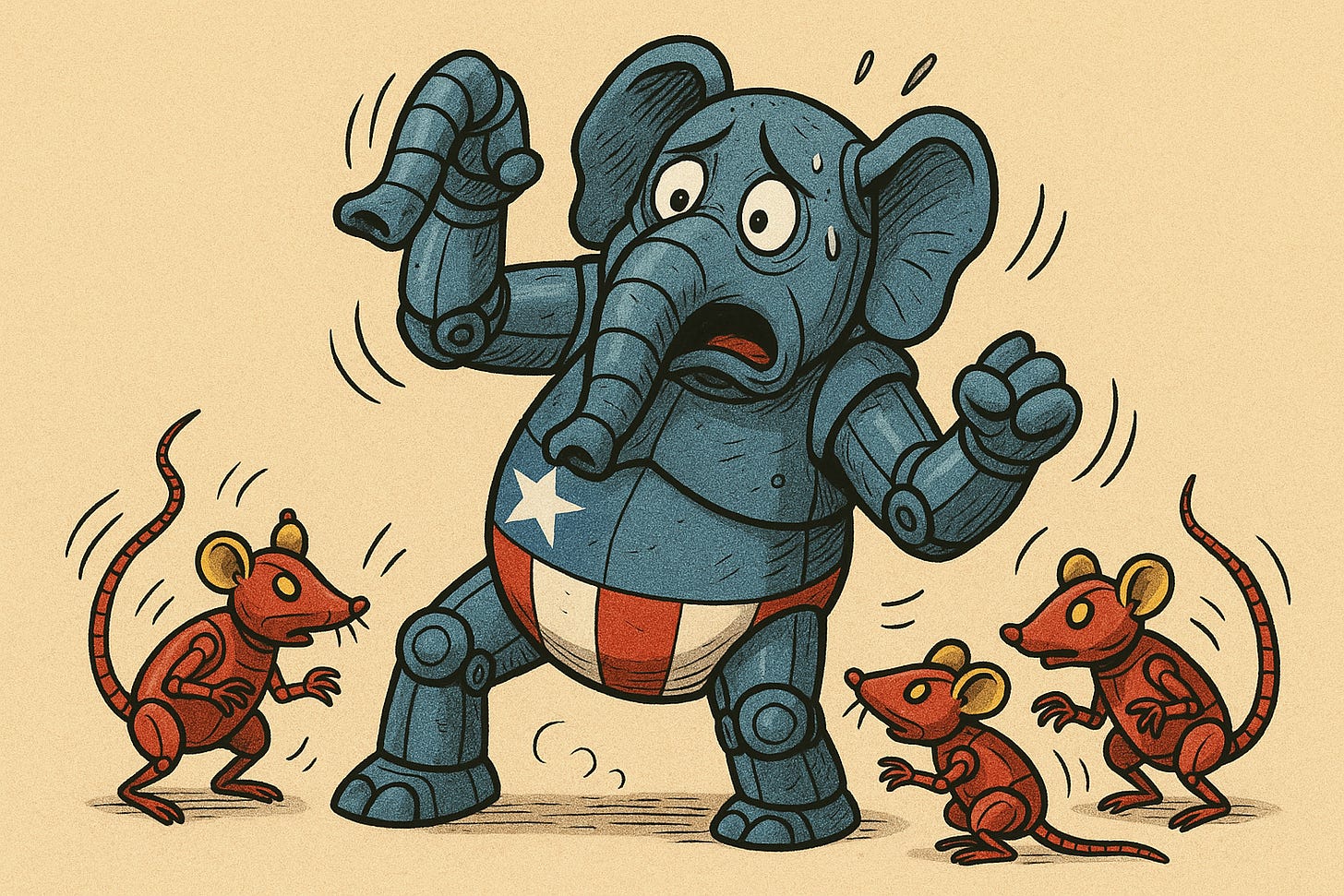

Too Big to Secure

Why China’s smaller, sovereign AI may outlast America’s hyperscale dreams

Two very different philosophies are shaping the future of artificial intelligence—and the chasm is widening fast.

Recent assessments from the Council on Foreign Relations (CFR) and allied strategic research centres highlight a deepening divergence: America's hyperscaler paradigm versus China's sovereign localisation approach. An analysis by Vinh Nguyen, CFR's senior fellow for artificial…